A Blueprint for Modern API Development: Repositories developers want to work on

Introduction

Pageripper is a commercial API that extracts data from webpages, even if they're rendered with JavaScript.

In this post, I'll detail the Continuous Integration and Continuous Delivery (CI/CD) automations I've configured via GitHub Actions for my Pageripper project, and explain how they work and why they make working on Pageripper delightful (and fast).

Why care about developer experience?

Working on well-automated repositories is delightful.

Focusing on the logic and UX of my changes allows me to do my best work, while the repository handles the tedium of running tests and publishing releases.

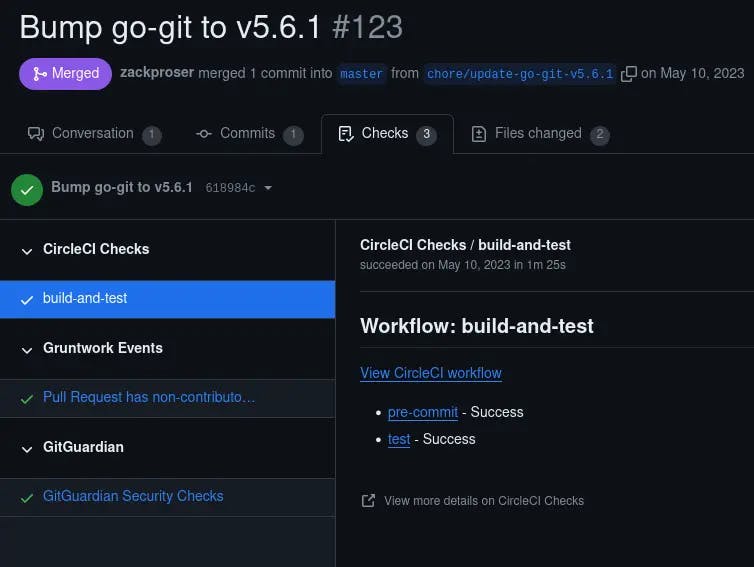

At Gruntwork.io, we published git-xargs, a tool for multiplexing changes across many repositories simultaneously.

Working on this project was a delight, because we spent the time to implement an excellent CI/CD pipeline that handled running tests and publishing releases.

As a result, reviewing and merging pull requests, adding new features and fixing bugs was significantly snappier, and felt easier to do.

So, why should you care about developer experience? Let's consider what happens when it's a mess...

What happens when your developer experience sucks

I've seen work slow to a crawl because the repositories were a mess: flaky, long-running test suites that took over 45 minutes to complete a single run.

Even well-intentioned and experienced developers slow down when dealing with repositories that lack CI/CD or that have problematic, flaky builds and, ultimately, untrustworthy pipelines.

Taking the time to correctly set up your repositories up front is a case of slowing down to go faster. Ultimately, it's a matter of project velocity.

Developer time is limited and expensive, so making sure the path is clear for the production line is critical to success.

What are CI/CD automations?

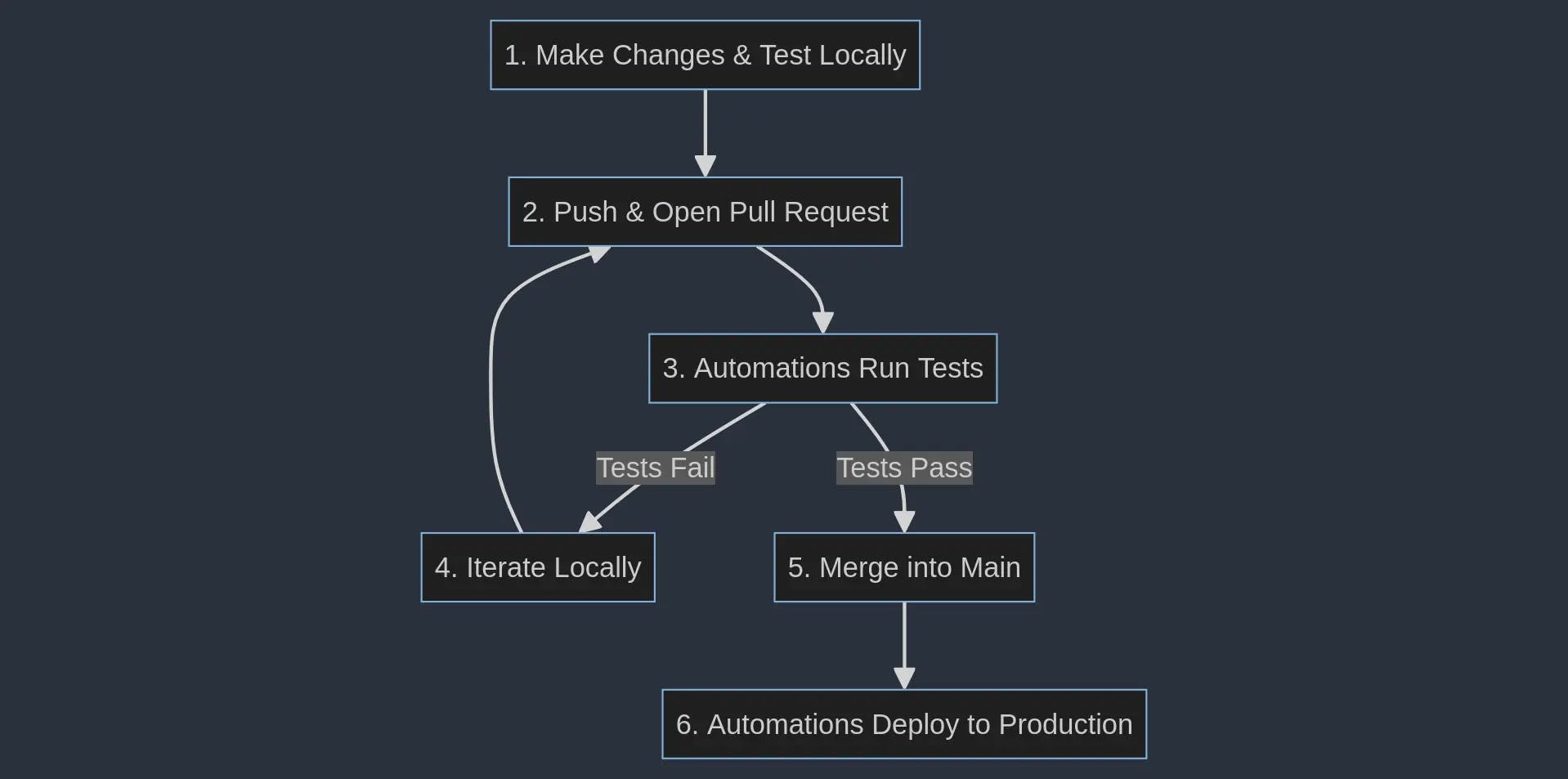

Continuous Integration is about constantly merging into your project verified and tested units of incremental value.

You add a new feature, test it locally and then push it up on a branch and open a pull request.

Without needing to do anything else, the automation workflows kick in and run the project's tests for you, verifying you haven't broken anything.

If the tests pass, you merge your changes in, which prompts more automation to deploy your latest code to production.

In this way, developers get to focus on logic, features, UX and doing the right thing from a code perspective. The pipeline provides the guardrails that everyone needs in order to move very quickly.

And that's what this is all about at the end of the day. Mature pipelines allow you to move faster. Safety begets speed.

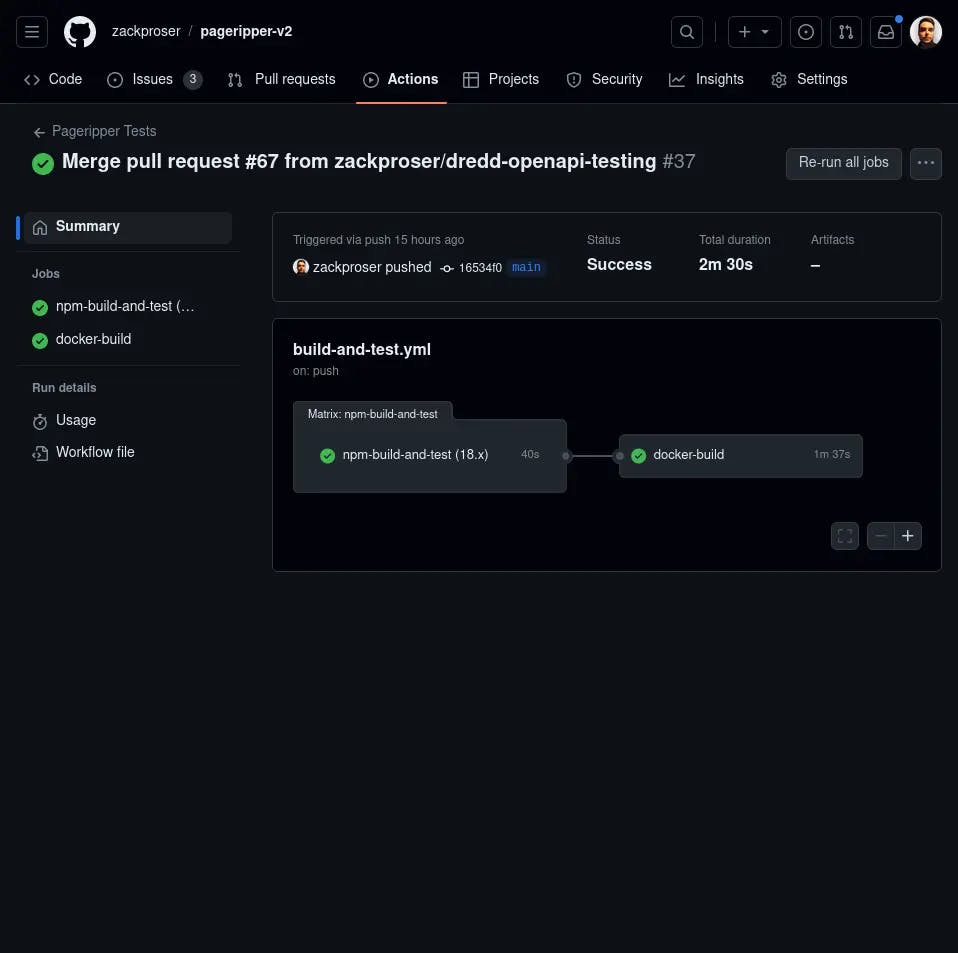

Pageripper's automations

Let's take a look at the workflows I've configured for Pageripper.

On pull request

Jest unit tests are run

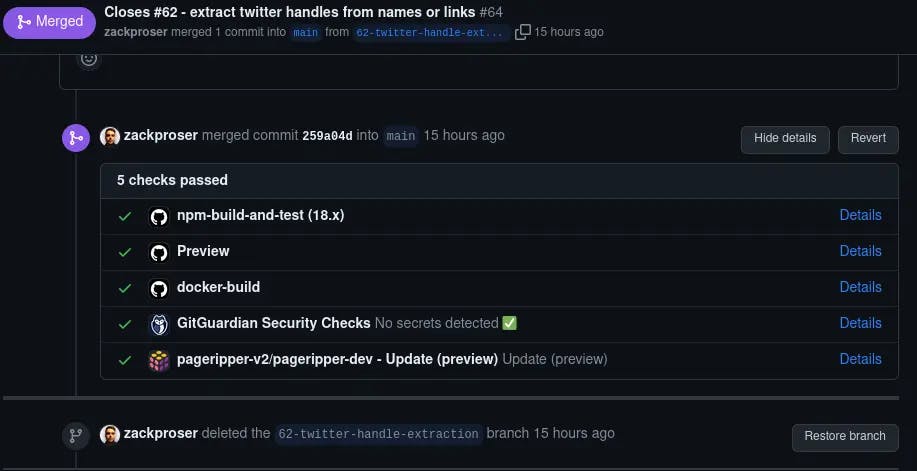

Tests run on every pull request, and they run quickly. Unit tests are written with Jest.

Developers get feedback on their changes in a minute or less, tightening the overall iteration cycle.
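Feedback this fast usually depends on unit tests that target small, pure functions needing no browser or network. The sketch below is a hypothetical example, not Pageripper's actual code: the `extractLinks` helper and its regular expression are assumptions made purely for illustration. In a Jest suite, a check on its output would normally live inside a `test(...)` block and use `expect(...).toEqual(...)`.

```typescript
// Hypothetical helper: collect href values from anchor tags in an HTML string.
// A regex is enough for a sketch; a real extractor would use an HTML parser.
function extractLinks(html: string): string[] {
  const links: string[] = [];
  const anchorHref = /<a\b[^>]*?\bhref\s*=\s*["']([^"']+)["']/gi;
  let match: RegExpExecArray | null;
  // exec() with the "g" flag advances through the string one match at a time.
  while ((match = anchorHref.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}
```

Because the function takes a string and returns an array, a test for it needs no setup and should finish in milliseconds, which is what keeps a pull request check this quick.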

npm build

It's possible for your unit tests to pass but your application build to still fail due to any number of things: from dependency issues to incorrect configurations and more.

For that reason, whenever tests are run, the workflow also runs an npm build to ensure the application builds successfully.

docker build

The Pageripper API is Dockerized because it's running on AWS Elastic Container Service (ECS). Because Pageripper uses Puppeteer, which uses Chromium or an installation of the Chrome browser, building the Docker image is a bit involved and also takes a while.

I want to know immediately if the build is broken, so if and only if all the tests pass, a test docker build also runs via GitHub Actions.
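The "if and only if" rule is a simple gate: a stage runs only when every earlier stage succeeded. In GitHub Actions this kind of ordering is typically expressed through job dependencies; the sketch below models the same idea in plain TypeScript. The stage names and the `runPipeline` helper are hypothetical and exist only to illustrate the gating.

```typescript
// A stage is a named check that reports success or failure.
type Stage = { name: string; run: () => boolean };

type StageResult = { name: string; status: "passed" | "failed" | "skipped" };

// Run stages in order; once one fails, every later stage is skipped.
function runPipeline(stages: Stage[]): StageResult[] {
  let blocked = false;
  return stages.map((stage): StageResult => {
    if (blocked) {
      return { name: stage.name, status: "skipped" };
    }
    const ok = stage.run();
    if (!ok) {
      blocked = true;
    }
    return { name: stage.name, status: ok ? "passed" : "failed" };
  });
}

// Example: a failing test run prevents the slow image build from starting.
const gateDemo = runPipeline([
  { name: "jest unit tests", run: () => false },
  { name: "test docker build", run: () => true },
]);
console.log(gateDemo.map((r) => `${r.name}: ${r.status}`).join(", "));
// prints "jest unit tests: failed, test docker build: skipped"
```

Gating the expensive step behind the cheap one means a broken test fails fast, and the long Docker build only consumes runner time when it has a chance of being useful.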

OpenAPI spec validation

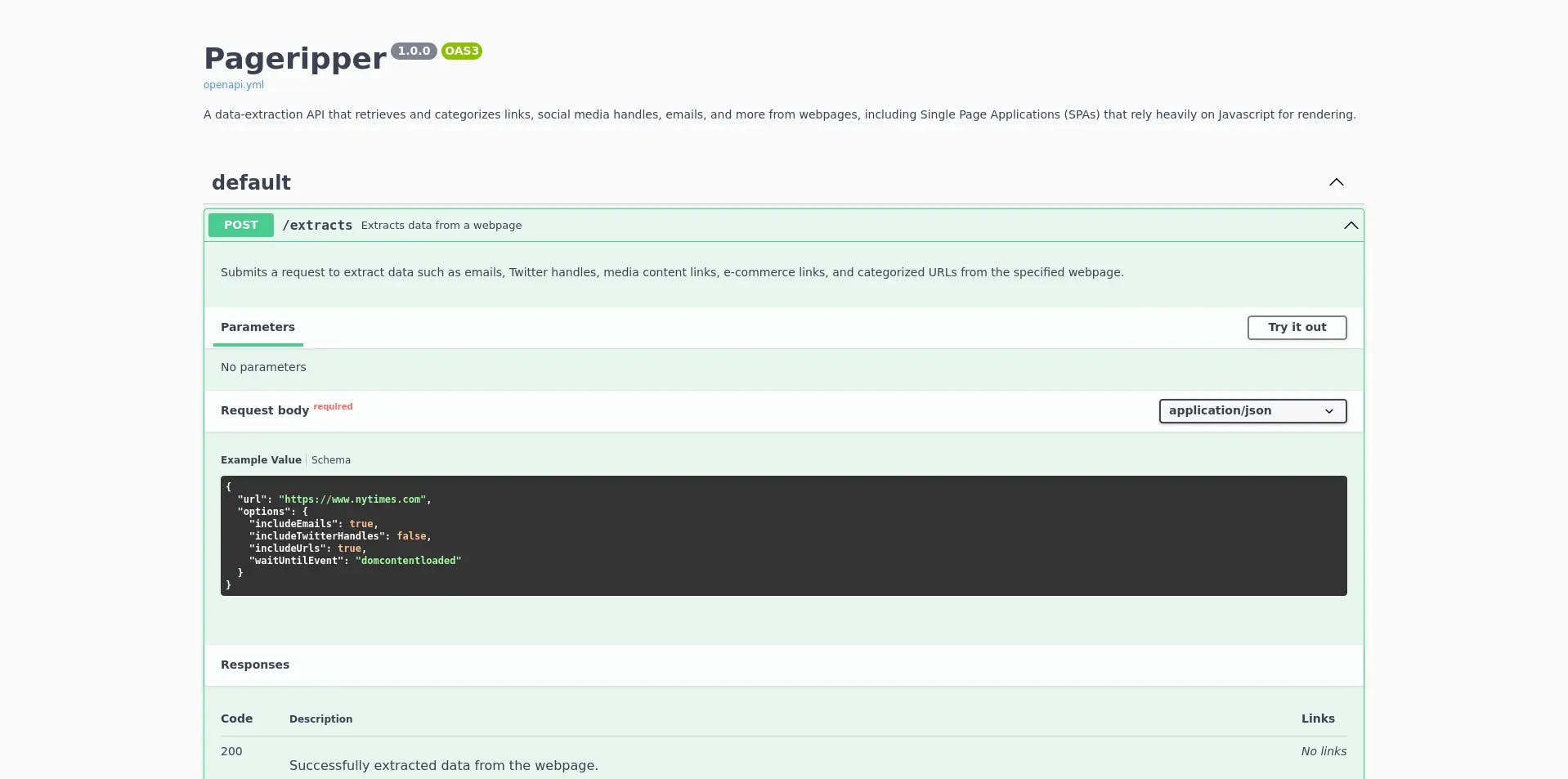

For consistency and the many downstream benefits (documentation and SDK generation, for example), I maintain an OpenAPI spec for Pageripper.

On every pull request, this spec is validated to ensure no changes or typos broke anything.
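What "validated" covers depends on the tool, and which validator the workflow uses is not stated here. As a rough illustration only, the sketch below checks a few top-level fields that an OpenAPI 3.0 document is required to have (`openapi`, `info.title`, `info.version` and `paths`) and that every path key starts with a slash. The `validateSpec` function is hypothetical, and a real validator checks far more than this.

```typescript
// Narrow an unknown value to a plain object we can index by key.
function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Returns human-readable problems; an empty list means these basic checks passed.
function validateSpec(spec: unknown): string[] {
  if (!isRecord(spec)) {
    return ["spec must be an object"];
  }
  const problems: string[] = [];

  const version = spec["openapi"];
  if (typeof version !== "string" || !version.startsWith("3.")) {
    problems.push("'openapi' must be a 3.x version string");
  }

  const info = spec["info"];
  if (!isRecord(info)) {
    problems.push("'info' object is required");
  } else {
    if (typeof info["title"] !== "string") problems.push("'info.title' is required");
    if (typeof info["version"] !== "string") problems.push("'info.version' is required");
  }

  const paths = spec["paths"];
  if (!isRecord(paths)) {
    problems.push("'paths' object is required");
  } else {
    for (const route of Object.keys(paths)) {
      if (!route.startsWith("/")) problems.push(`path '${route}' must start with '/'`);
    }
  }
  return problems;
}
```

A CI step built on a check like this would fail the pull request whenever the returned list is non-empty, so a typo in the spec is caught before it reaches the generated documentation.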

This spec is used for a couple of things:

- Generating the Swagger UI for the API documentation that is hosted on GitHub Pages and integrated with the repository

- Generating the test requests and the documentation and examples on RapidAPI, where Pageripper is listed

- Running Dredd to validate that the API correctly implements the spec

Pulumi preview

Pageripper uses Pulumi and Infrastructure as Code (IaC) to manage not just the packaging of the application into a Docker container, but the orchestration of all other supporting infrastructure and AWS resources that comprise a functioning production API service.

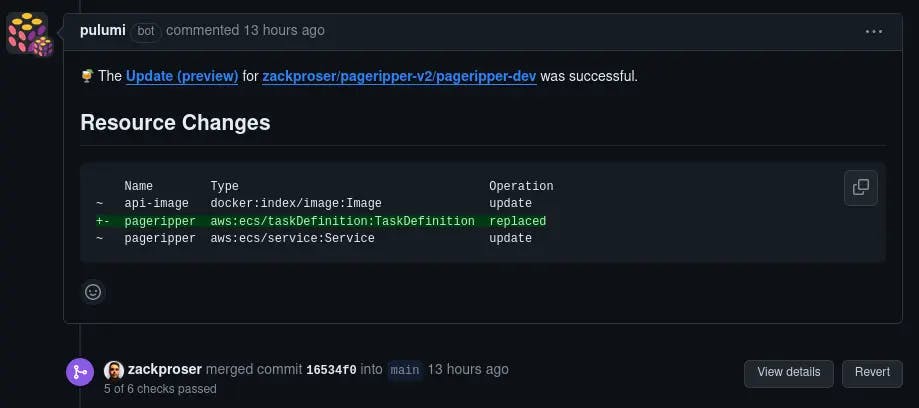

This means that on every pull request we can run pulumi preview to get a delta of the changes that Pulumi will make to our AWS account on the next deployment.
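Conceptually, a preview is a list of planned operations, one per resource. To make the idea of a delta concrete, the sketch below tallies such a list into a one-line summary that is easy to scan during review. The `PlannedChange` type, the operation labels and `summarizePreview` are all hypothetical; this is not Pulumi's API or its output format.

```typescript
// Hypothetical model of what a preview reports for each resource.
type Operation = "create" | "update" | "delete" | "same";

type PlannedChange = { resource: string; op: Operation };

// Count planned operations by kind and render a short summary line.
function summarizePreview(changes: PlannedChange[]): string {
  const counts: Record<Operation, number> = { create: 0, update: 0, delete: 0, same: 0 };
  for (const change of changes) {
    counts[change.op] += 1;
  }
  return (
    `${counts.create} to create, ${counts.update} to update, ` +
    `${counts.delete} to delete, ${counts.same} unchanged`
  );
}
```

Seeing a non-zero delete count on a pull request that was only meant to tweak application code is exactly the kind of surprise a preview is meant to surface before deployment.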

To further reduce friction, I've configured the Pulumi GitHub application to run on my repository, so that the output of pulumi preview is added directly to my pull request as a comment.

On merge to main

OpenAPI spec is automatically published

A workflow converts the latest OpenAPI spec into a Swagger UI site that details the various API endpoints and their expected request and response formats.

Pulumi deployment to AWS

The latest changes are deployed to AWS via the pulumi update command. This means that what's at the HEAD of the repository's main branch is what's in production at any given time.

This also means that developers never need to:

- Worry about maintaining the credentials for deployments themselves

- Worry about maintaining the deployment pipeline themselves via scripts

- Worry about their team members being able to follow the same deployment process

- Worry about scheduling deployments for certain days of the week - they can deploy multiple times a day, with confidence
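Putting the two triggers side by side, the overall shape of the automation can be summarized as a mapping from repository event to the jobs described in this post. The event labels and the `jobsFor` function below are illustrative only; they are not the names used in Pageripper's workflow files.

```typescript
// Illustrative labels for the two triggers discussed in this post.
type RepoEvent = "pull_request" | "merge_to_main";

const jobsByEvent: Record<RepoEvent, string[]> = {
  // Checks that give feedback before anything is merged.
  pull_request: [
    "jest unit tests",
    "npm build",
    "docker build",
    "OpenAPI spec validation",
    "pulumi preview",
  ],
  // Actions that publish and deploy once changes land on main.
  merge_to_main: ["publish OpenAPI docs", "pulumi deployment to AWS"],
};

// Look up which jobs should run for a given event.
function jobsFor(event: RepoEvent): string[] {
  return jobsByEvent[event];
}
```

Keeping verification on the pull request and deployment on the merge is what lets the main branch stay in step with production without anyone deploying by hand.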

Thanks for reading

If you're interested in automating more of your API development lifecycle, have a look at the workflows in the Pageripper repository.

And if you need help configuring CI/CD for the ultimate velocity and developer productivity, feel free to reach out!